Why Can Transformers Only Use AC?


Unlocking the Mystery

1. The AC Advantage

Ever wondered why those trusty transformers humming away in substations and powering our devices can't just run on direct current (DC)? It's a question that pops up more often than you might think, and the answer is rooted in the very core of how transformers operate: magnetism! Think of a transformer as a love story between electricity and magnetism, but with a twist.

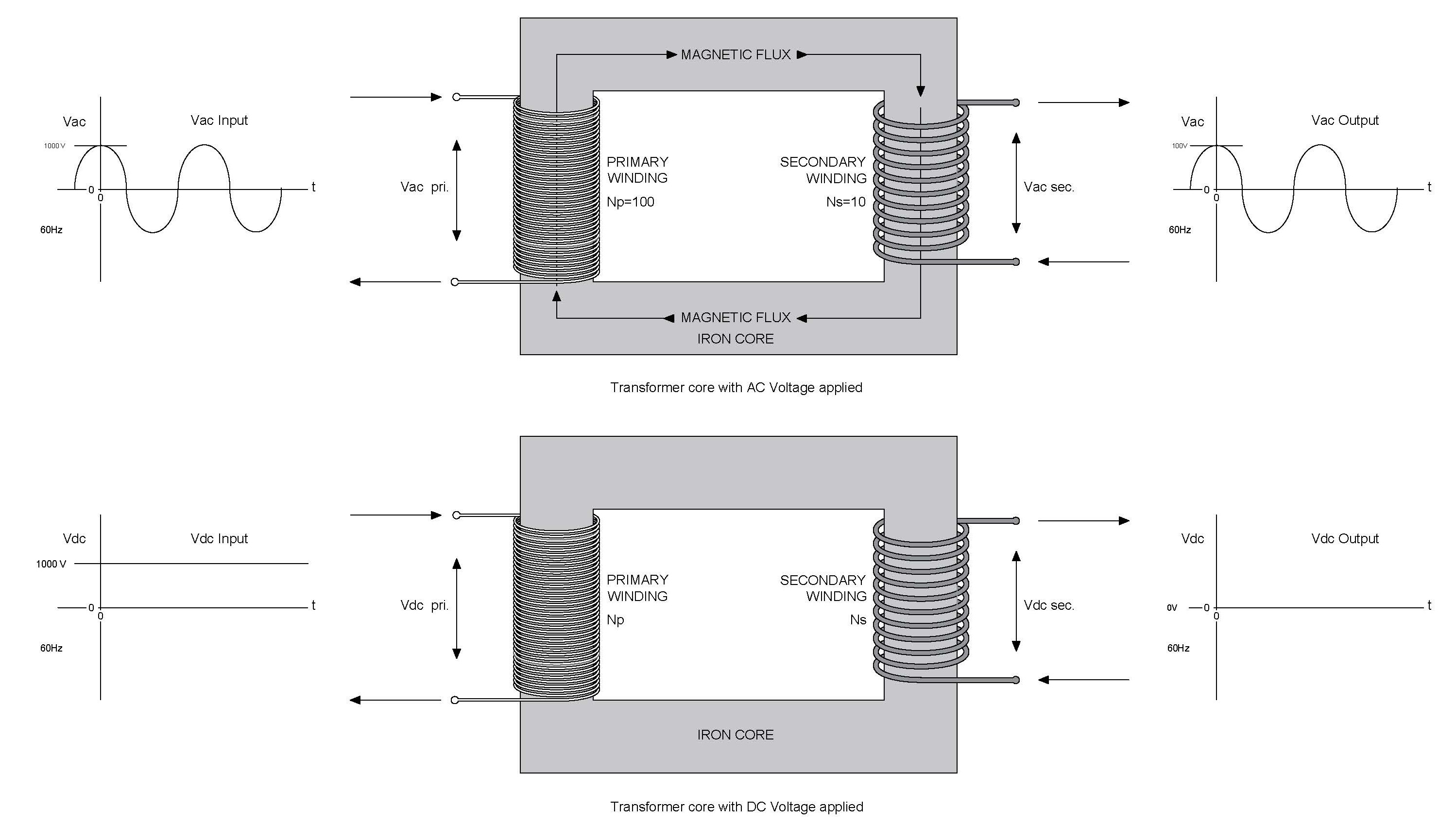

Transformers work on the principle of electromagnetic induction, which, in simple terms, means they use a changing magnetic field to induce a voltage in a secondary coil. This is how they step voltages up or down without any direct electrical connection between the circuits. Now, here's the kicker: the field has to be changing. DC, by its very nature, provides a steady, unchanging current. No change means no variation in the magnetic field, and therefore no induced voltage. It's like trying to start a fire with a single, still log: you need some movement, some action, to get things going!
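For an ideal transformer, the same changing flux links both coils, so every turn sees the same induced voltage and the coil voltages scale with their turn counts: V_s / V_p = N_s / N_p. The sketch below is a minimal illustration assuming an ideal (lossless, perfectly coupled) transformer on an AC supply; the function name and the example turn counts are hypothetical.

```python
def secondary_voltage(v_primary: float, n_primary: int, n_secondary: int) -> float:
    """Ideal-transformer estimate of the secondary (RMS) voltage.

    Assumes an AC primary voltage, perfect coupling and no losses.
    The ratio only holds while the flux is changing; a steady DC
    primary produces no secondary voltage at all.
    """
    if n_primary <= 0 or n_secondary <= 0:
        raise ValueError("turn counts must be positive")
    return v_primary * n_secondary / n_primary


# Step-down: 240 V across 1000 primary turns, 50 secondary turns.
print(secondary_voltage(240.0, 1000, 50))    # 12.0
# Step-up: same primary, 4000 secondary turns.
print(secondary_voltage(240.0, 1000, 4000))  # 960.0
```

More secondary turns than primary turns steps the voltage up; fewer steps it down.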

Imagine a transformer as a set of coupled swings. One swing (the primary coil) gets pushed with AC, causing it to oscillate. This oscillation creates a moving magnetic field that then pushes the other swing (the secondary coil), transferring energy without any physical contact. DC, on the other hand, would be like pushing the first swing once and holding it there — the second swing would just sit there, unmoved and uninspired. Sad, really.

So, essentially, transformers require an alternating current because it provides the constantly fluctuating magnetic field necessary for electromagnetic induction to work. Without that fluctuation, the whole system grinds to a halt. Think of it as the transformer's way of saying, "Gimme some rhythm!"


The Role of Electromagnetic Induction

2. Faraday's Law and the AC Connection

To dive a bit deeper, let's bring in the big guns — or rather, the big law: Faraday's Law of Electromagnetic Induction. This law states that the induced electromotive force (EMF), or voltage, in any closed circuit is equal to the negative of the time rate of change of the magnetic flux through the circuit. Sounds complicated? It is, a little. But in essence, it means that the faster the magnetic field changes, the greater the induced voltage.
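Written out for a coil of N turns, the law says e = -N dΦ/dt. Assuming, for illustration, a sinusoidal flux with peak value Φ_max and angular frequency ω = 2πf (symbols introduced here, not in the original), the two cases work out as:

```latex
\begin{aligned}
e(t) &= -N\,\frac{d\Phi}{dt} \\
\text{AC: } \Phi(t) = \Phi_{\max}\sin(\omega t) &\;\Rightarrow\; e(t) = -N\omega\,\Phi_{\max}\cos(\omega t) \\
\text{steady DC: } \Phi(t) = \Phi_0 &\;\Rightarrow\; e(t) = 0
\end{aligned}
```

The peak EMF, NωΦ_max, grows with frequency, which is the "faster change, bigger voltage" point above expressed in symbols.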

Now, AC happily provides that constantly changing magnetic flux because, well, it's alternating! Its current is constantly rising and falling, reversing direction periodically. This translates directly into a magnetic field that is perpetually building up, collapsing and reversing, ready to induce a voltage in the secondary coil. It's like a constant wave of opportunity for the transformer to do its job.

With DC, the magnetic field, once established, remains constant. There's no rate of change, no fluctuating flux, and hence, no induced voltage in the secondary coil. It's a magnetic stalemate, a still life that simply won't generate any electrical activity on the other side of the transformer. Think of it as a really boring magnetic field, one that doesn't inspire any electrical excitement.
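To see the contrast numerically, the sketch below samples one cycle of a sinusoidal flux and a constant (steady DC) flux, then approximates e = -N dΦ/dt with a forward difference. The frequency, peak flux and turn count are assumed example values, and `induced_emf` is a hypothetical helper written for this illustration.

```python
import math


def induced_emf(flux: list[float], dt: float, turns: int) -> list[float]:
    """Approximate e = -N * dPhi/dt with a forward difference."""
    return [-turns * (flux[i + 1] - flux[i]) / dt for i in range(len(flux) - 1)]


FREQ_HZ = 50.0        # assumed supply frequency
PEAK_FLUX_WB = 1e-3   # assumed peak flux (webers)
TURNS = 100           # assumed secondary turns
DT = 1e-5             # sample spacing in seconds
N_SAMPLES = 2001      # covers 20 ms, one full 50 Hz cycle

times = [i * DT for i in range(N_SAMPLES)]
ac_flux = [PEAK_FLUX_WB * math.sin(2 * math.pi * FREQ_HZ * t) for t in times]
dc_flux = [PEAK_FLUX_WB for _ in times]  # steady DC: the flux never changes

ac_emf = induced_emf(ac_flux, DT, TURNS)
dc_emf = induced_emf(dc_flux, DT, TURNS)

print(f"peak AC EMF: {max(abs(e) for e in ac_emf):.1f} V")  # 31.4 V
print(f"peak DC EMF: {max(abs(e) for e in dc_emf):.1f} V")  # 0.0 V
```

The AC case matches the analytical peak NωΦ_max = 100 × 2π × 50 × 0.001 ≈ 31.4 V, while the constant flux induces nothing.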

Therefore, Faraday's Law provides the scientific underpinning for why transformers rely on AC power, or more precisely on a current that keeps changing. It's not just a preference; it's a fundamental requirement dictated by the laws of physics. Trying to run a transformer on steady DC is like trying to power a windmill with still air: it just ain't gonna happen.

Why Not Just Use DC Transformers?

3. A Practical Consideration

Okay, so AC is the clear winner for traditional transformers, but what about designing a "DC transformer"? Surely, with enough engineering ingenuity, we could find a way around these limitations, right? Sort of: every workaround still ends up feeding the transformer a changing current, and getting there adds complexity, cost, and some losses.

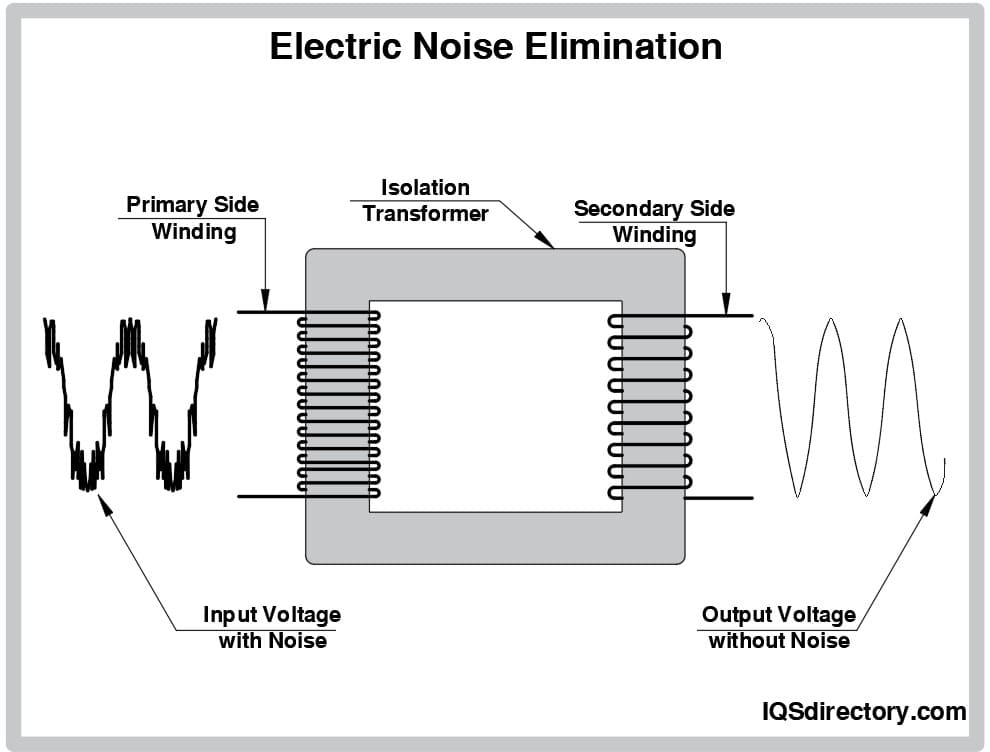

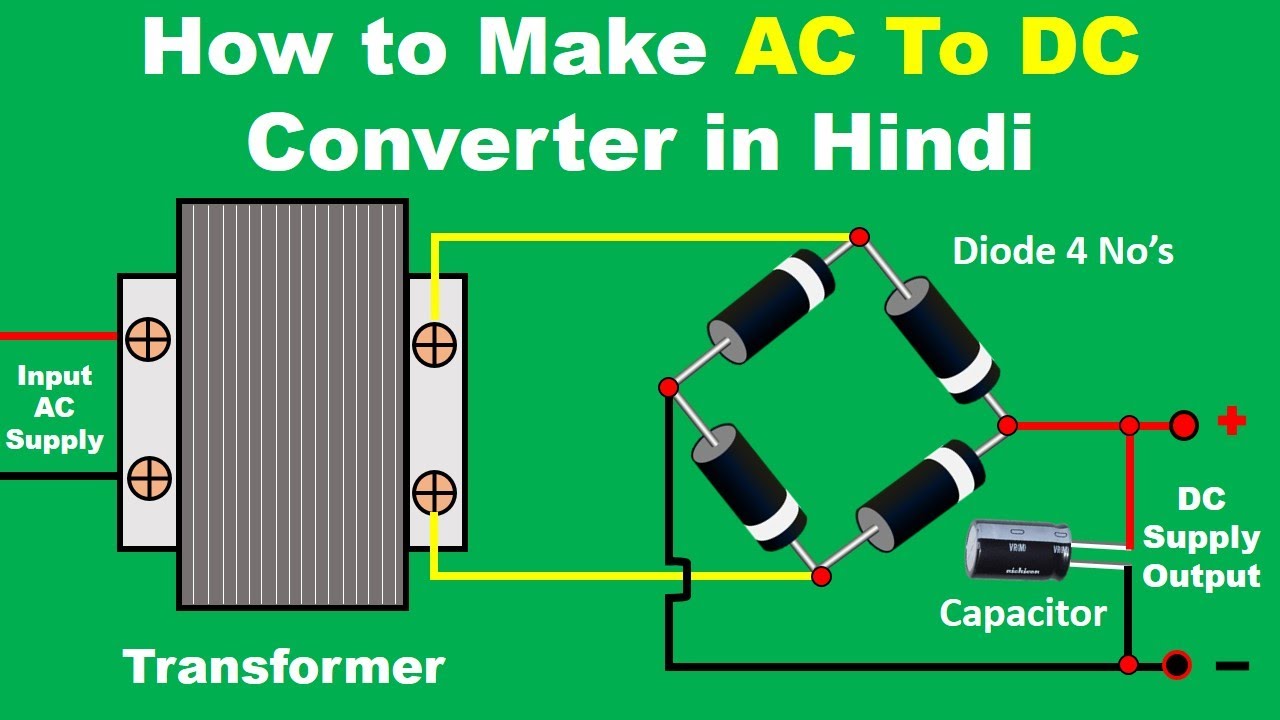

One potential workaround is to use a DC-to-AC converter followed by a traditional transformer and then an AC-to-DC converter, if you need DC at the end. However, every conversion step loses some energy as heat and adds cost and complexity, so the chain as a whole is less efficient than a single transformer running on an AC supply. It's like playing a game of telephone, but instead of garbled information, you're losing a little energy with each hand-off. Not ideal.
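Losses in chained stages compound multiplicatively: the overall efficiency is the product of the individual stage efficiencies. A minimal sketch follows; the three stage efficiencies are illustrative placeholders, not measured figures for any real equipment.

```python
from math import prod


def chain_efficiency(stage_efficiencies: list[float]) -> float:
    """Overall efficiency of conversion stages connected in series."""
    for eta in stage_efficiencies:
        if not 0.0 < eta <= 1.0:
            raise ValueError(f"efficiency must be in (0, 1], got {eta}")
    return prod(stage_efficiencies)


# Hypothetical chain: DC-to-AC inverter, transformer, AC-to-DC rectifier.
stages = [0.97, 0.99, 0.97]
overall = chain_efficiency(stages)
print(f"overall efficiency: {overall:.1%}")  # 93.1%
```

Each stage looks efficient on its own, yet together they lose nearly 7% in this example.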

Another approach uses electronic switching circuits to rapidly interrupt the DC current, creating a pulsed DC that mimics some aspects of AC. This does work (chopping DC at high frequency is essentially what switching power supplies do), but it needs extra switching and control circuitry. Simply switching the current on and off also leaves a steady DC component that the transformer cannot pass on and that can push its core toward saturation unless the circuit is designed to reset it. It's like trying to teach a cat to play the piano: technically possible, but it takes a lot of effort before the results are harmonious.
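The difference between merely interrupting DC and actually alternating it shows up in the waveform's average (DC) component, which a transformer cannot pass on. The sketch below compares an on/off chopped waveform with a bridge-style ±V square wave. The 12 V supply, 50% duty cycle and the helper names are assumptions made for illustration.

```python
def square_wave(v_dc: float, samples_per_period: int, periods: int, bipolar: bool) -> list[float]:
    """Chop a DC supply with an idealized 50% duty-cycle switch.

    bipolar=False: the switch simply connects/disconnects the supply (0 or +V).
    bipolar=True:  bridge-style switching reverses the polarity (+V or -V).
    """
    low = -v_dc if bipolar else 0.0
    half = samples_per_period // 2
    wave: list[float] = []
    for _ in range(periods):
        wave.extend([v_dc] * half + [low] * (samples_per_period - half))
    return wave


def average(values: list[float]) -> float:
    """Mean value of the samples, i.e. the waveform's DC component."""
    return sum(values) / len(values)


V_SUPPLY = 12.0  # assumed DC supply voltage
pulsed = square_wave(V_SUPPLY, samples_per_period=100, periods=10, bipolar=False)
alternating = square_wave(V_SUPPLY, samples_per_period=100, periods=10, bipolar=True)

print(f"pulsed DC average:   {average(pulsed):.1f} V")       # 6.0 V
print(f"alternating average: {average(alternating):.1f} V")  # 0.0 V
```

The pulsed waveform still carries a 6 V DC offset, while the reversing waveform averages to zero, so all of it is the "changing" part a transformer can use.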

Ultimately, the simplicity, efficiency, and cost-effectiveness of AC transformers make them the preferred choice for stepping voltages up and down in power systems. DC-DC converters do exist, but the isolated kinds rely on exactly this switching trick, chopping the DC into high-frequency AC so that their internal transformer still sees a changing current, and they are mostly found in electronic equipment rather than in bulk power distribution. So, while a "DC transformer" might sound like a cool idea, the practical realities make it a less attractive option.

The Frequency Factor

4. AC Frequency and Transformer Performance

The frequency of the AC supply plays a significant role in the design and performance of transformers. Remember that changing magnetic field? The speed at which it changes (the frequency) affects both how big the transformer has to be and how much energy it loses. A higher frequency generally allows a smaller and lighter transformer for a given power rating. Think of it like shaking a maraca: the faster you shake, the more sound you get.
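The standard transformer EMF equation makes the size argument concrete: for a sinusoidal supply, V_rms = √2·π·f·N·A·B_max ≈ 4.44·f·N·A·B_max, where A is the core cross-section and B_max the peak flux density. For a fixed voltage, turn count and flux density, the required core area scales as 1/f. The sketch below assumes the voltage, turns and a 1.2 T flux density; holding B_max constant across frequencies is a simplification, since high-frequency ferrite cores run at lower flux densities, so the real saving is smaller.

```python
import math


def core_area_m2(v_rms: float, freq_hz: float, turns: int, b_peak_t: float) -> float:
    """Core cross-section from the transformer EMF equation (sinusoidal supply).

    V_rms = sqrt(2) * pi * f * N * A * B_peak  =>  A = V_rms / (sqrt(2) * pi * f * N * B_peak)
    """
    return v_rms / (math.sqrt(2) * math.pi * freq_hz * turns * b_peak_t)


V_RMS = 230.0   # assumed primary voltage
TURNS = 500     # assumed primary turns
B_PEAK = 1.2    # assumed peak flux density in tesla, held fixed for comparison

for f in (50.0, 20_000.0):
    area_cm2 = core_area_m2(V_RMS, f, TURNS, B_PEAK) * 1e4
    print(f"{f:>8.0f} Hz -> core area {area_cm2:.4f} cm^2")
```

Under these assumptions the 50 Hz core needs about 17 cm² of cross-section, and the 20 kHz core needs 1/400 of that.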

At higher frequencies each half-cycle is shorter, so for a given voltage the core has to carry less peak magnetic flux (and the windings need less inductance to keep the no-load magnetizing current small). That allows a smaller, lighter core, which matters most where size and weight are critical, such as in portable electronic devices. However, there are trade-offs. Higher frequencies increase losses from skin effect and in the core itself. Skin effect pushes the current toward the surface of the conductor, raising its effective resistance, while core losses come from hysteresis and eddy currents in the transformer core, both of which grow with frequency.
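Skin effect can be put into numbers with the standard skin-depth formula δ = √(ρ / (π·f·μ)), the depth below the surface at which current density has fallen to 1/e (about 37%) of its surface value. A minimal sketch for copper, assuming a room-temperature resistivity of 1.68×10⁻⁸ Ω·m and a relative permeability of 1:

```python
import math

RHO_COPPER = 1.68e-8       # assumed copper resistivity, ohm-metres (room temperature)
MU_0 = 4 * math.pi * 1e-7  # permeability of free space, H/m (copper is essentially non-magnetic)


def skin_depth_m(freq_hz: float, rho: float = RHO_COPPER, mu: float = MU_0) -> float:
    """Depth at which AC current density falls to 1/e of its surface value."""
    return math.sqrt(rho / (math.pi * freq_hz * mu))


for f in (50.0, 60.0, 20_000.0, 1_000_000.0):
    print(f"{f:>10.0f} Hz -> skin depth {skin_depth_m(f) * 1e3:.3f} mm")
```

The depth is roughly 9 mm at 50 Hz but well under a tenth of a millimetre at 1 MHz, so at high frequency much of a thick conductor's cross-section carries little current.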

Transformer designers carefully weigh these factors when selecting the operating frequency. The choice depends on the specific application and the desired balance between size, weight, efficiency, and cost. For example, power distribution transformers run at the grid frequency of 50 or 60 Hz, where core losses stay low and efficiency is high, while transformers used in switching power supplies often operate at much higher frequencies (tens of kHz or even MHz) to reduce size and weight.

So, while transformers can only use AC, the specific frequency of that AC is a crucial design parameter that significantly impacts their overall performance. It's like choosing the right seasoning for a dish — it can make all the difference!


Practical Applications and Real-World Examples

5. Transformers in Action

We've talked about the theory behind why transformers need AC, but let's bring it down to earth with some real-world examples. Think about the journey of electricity from the power plant to your home. Power plants generate electricity at relatively low voltages, but transmitting it over long distances at these voltages would result in significant energy losses due to resistance in the transmission lines. This is where transformers come to the rescue.

Step-up transformers at the power plant increase the voltage to hundreds of thousands of volts for efficient long-distance transmission. For the same power, a higher voltage means a proportionally lower current, and because resistive losses in the lines grow with the square of the current, the losses drop sharply. Along the way, substations use step-down transformers to gradually reduce the voltage to levels suitable for distribution to homes and businesses. Finally, a transformer near your home steps the voltage down to the standard voltage used by your appliances (e.g., 120 V or 240 V).
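The payoff of high transmission voltage follows from P = V·I and loss = I²·R: for the same power, raising the voltage by a factor k cuts the current by k and the resistive loss by k². A minimal single-line sketch; the 10 MW load, 5 Ω line resistance and the two voltages are assumed round numbers, and real three-phase lines with reactive power are more involved.

```python
def line_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive loss I^2 * R when sending power_w at voltage_v (unity power factor assumed)."""
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm


POWER_W = 10e6      # assumed power sent: 10 MW
LINE_R_OHM = 5.0    # assumed total line resistance

for kv in (10.0, 400.0):
    loss = line_loss_w(POWER_W, kv * 1e3, LINE_R_OHM)
    print(f"{kv:>5.0f} kV: loss {loss / 1e3:,.1f} kW ({loss / POWER_W:.2%} of the power)")
```

Raising the voltage 40-fold, from 10 kV to 400 kV, cuts the loss 1600-fold in this example (5 MW down to about 3.1 kW).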

But transformers aren't just used in large-scale power distribution. They're also found in countless everyday devices, from your laptop charger to your microwave oven. These smaller transformers perform similar voltage transformations, adapting the incoming voltage from the wall outlet to the specific requirements of the device. Without transformers, our modern electrical grid and many of our electronic devices simply wouldn't be possible.

In essence, transformers are the unsung heroes of the electrical world, silently and efficiently transforming voltages behind the scenes. They're a testament to the power of electromagnetic induction and a crucial component of our modern technological infrastructure. So, the next time you plug in your phone, take a moment to appreciate the humble transformer and its reliance on the rhythmic dance of AC power.