What Are the Three Main Problems in Concurrency Control?


Navigating the Tricky Waters of Concurrency Control

1. The Concurrency Conundrum

Imagine a bustling kitchen where multiple chefs are preparing different dishes, all using the same ingredients and tools. Sounds chaotic, right? That's kind of what concurrency control is all about in the world of databases and software systems. It's the art of managing multiple processes accessing and modifying shared data at the same time, without causing a complete meltdown. Think of it as traffic control for data, making sure everything flows smoothly and arrives at its destination in one piece.

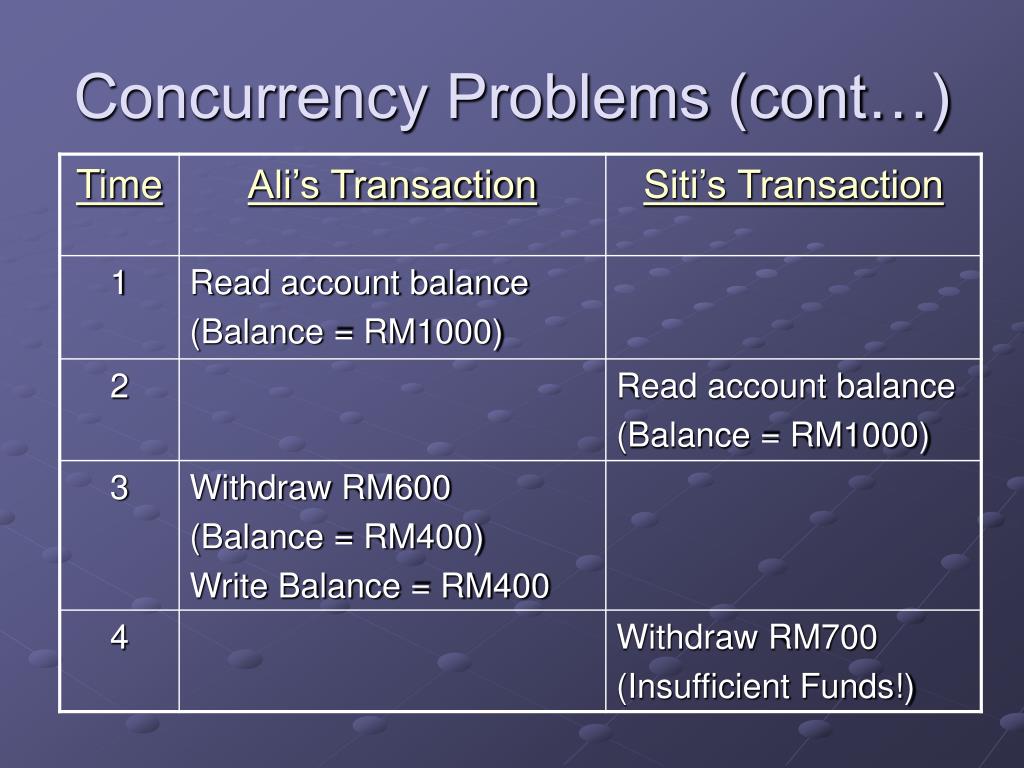

Now, why is this so important? Well, without proper concurrency control, you might end up with some serious data mishaps. Imagine a scenario where two people try to book the last seat on a flight simultaneously. If the system isn't carefully managed, both might get confirmation, leading to an overbooking nightmare. Or picture trying to update your bank balance while the system is processing a transaction. If those actions aren't properly coordinated, you might end up with the wrong amount in your account. Not ideal!

In essence, concurrency control is about maintaining data integrity and consistency in a multi-user environment. It's about ensuring that transactions are executed in a reliable and predictable manner, even when multiple users are hitting the system simultaneously. It's a fundamental challenge in computer science, and understanding its core problems is key to building robust, dependable systems.

So, what are these core problems, these potential pitfalls that can lead to data chaos? Let's dive in and explore the three main culprits that keep concurrency control experts up at night. Get ready to meet Lost Updates, Dirty Reads, and Inconsistent Retrievals — the terrors of concurrent data access!

The Three Horsemen of the Data Apocalypse

2. Decoding the Demons

Alright, let's get down to the specifics. When we talk about the main problems in concurrency control, we're really talking about three classic scenarios that can wreak havoc on your data: Lost Updates, Dirty Reads, and Inconsistent Retrievals. These are like the monster-under-the-bed scenarios that every database administrator and software developer dreads.

Let's begin with the Lost Update problem. Imagine two people, Alice and Bob, both trying to update the quantity of widgets in stock. Alice reads the current value (let's say it's 10), subtracts 3 because a customer bought three widgets, and prepares to write 7 back. At the same time, Bob also reads the initial value of 10, subtracts 2 because another customer bought two widgets, and prepares to write 8. If Bob writes his 8 after Alice has read the value but before she writes hers, Alice's write then overwrites Bob's, and the final quantity in the database is 7 (10 - 3) instead of the correct 5 (10 - 3 - 2). Bob's update is lost. Oops! The database now claims two widgets are on the shelf that were actually sold, and your inventory system is a liar.
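To make the interleaving concrete, here is a minimal Python replay of the Alice and Bob scenario. It is a sketch, not database code: a plain dict stands in for the stock row, and the function name `replay_lost_update` is made up for illustration. The reads and writes run in exactly the order described above, so the outcome is deterministic.

```python
def replay_lost_update(initial: int, alice_sold: int, bob_sold: int) -> int:
    """Replay the Alice/Bob interleaving against a dict standing in for the row."""
    db = {"widgets": initial}

    alice_copy = db["widgets"]               # Alice reads 10
    bob_copy = db["widgets"]                 # Bob also reads 10, before Alice writes

    db["widgets"] = bob_copy - bob_sold      # Bob writes 8
    db["widgets"] = alice_copy - alice_sold  # Alice writes 7, overwriting Bob's 8

    return db["widgets"]


final = replay_lost_update(10, alice_sold=3, bob_sold=2)
print(final)       # 7
print(10 - 3 - 2)  # 5, what running the two sales one after the other would leave
```

Each step is correct on its own; the damage comes purely from Alice writing a value computed from a read that Bob's write has since made stale.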

Next up is the Dirty Read. This happens when one transaction reads data that another transaction has modified but not yet committed (meaning the change might still be rolled back). Imagine Transaction A updates a customer's address but hasn't committed the change yet. Transaction B then reads this updated address. If Transaction A later rolls back its changes (maybe the customer provided incorrect information), Transaction B is left holding an address that never officially existed: dirty data. If B was printing shipping labels, the customer's mail could end up at the wrong place.
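Here is a toy model of that sequence in Python, assuming a hypothetical `ToyStore` class rather than any real database. Writes from an open transaction sit in a separate `pending` buffer, and `read_uncommitted` deliberately looks there first, which is exactly what makes Transaction B's read dirty.

```python
class ToyStore:
    """Toy key-value store whose read_uncommitted mimics READ UNCOMMITTED behavior."""

    def __init__(self, data: dict):
        self.committed = dict(data)
        self.pending = {}  # writes made by an open, not-yet-committed transaction

    def write_uncommitted(self, key, value):
        self.pending[key] = value

    def rollback(self):
        self.pending.clear()

    def commit(self):
        self.committed.update(self.pending)
        self.pending.clear()

    def read_uncommitted(self, key):
        # Dirty read: prefer a pending (uncommitted) value if one exists.
        return self.pending.get(key, self.committed[key])

    def read_committed(self, key):
        # Only ever returns values that have been committed.
        return self.committed[key]


store = ToyStore({"address": "1 Old Street"})
store.write_uncommitted("address", "9 Typo Lane")  # Transaction A, not committed
b_saw = store.read_uncommitted("address")           # Transaction B reads it
store.rollback()                                    # Transaction A rolls back
print(b_saw)                           # 9 Typo Lane, a value that was never committed
print(store.read_committed("address"))  # 1 Old Street
```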

Finally, we have the Inconsistent Retrieval problem. This occurs when a transaction reads several related values, or the same value more than once, while another transaction is updating them, so the values it sees never existed together in any single consistent state. Think of it like this: you're trying to get a consistent snapshot of a bank account balance by summing all of its transactions. But while you're adding up the debits and credits, another transaction transfers money out of the account. You might end up with a balance that doesn't reflect any true state of the account, either too high or too low. A closely related case, where a transaction reads the same item twice and gets two different values, is called a non-repeatable read.
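The repeated-read form of this problem can be replayed step by step. In this minimal sketch a dict stands in for the account, the balance of 500 and the transfer of 200 are invented numbers, and no isolation is applied, so the second read sees the other transaction's committed change.

```python
def replay_non_repeatable_read() -> tuple[int, int]:
    """Read the same balance twice while another transaction commits in between."""
    account = {"balance": 500}         # committed state; no isolation at all

    first_read = account["balance"]    # reporting transaction reads 500
    account["balance"] -= 200          # concurrent transfer out commits here
    second_read = account["balance"]   # same transaction reads again: 300

    return first_read, second_read


print(replay_non_repeatable_read())    # (500, 300)
```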

Lost Updates

3. How Updates Vanish into Thin Air (and What to Do About It)

We've already touched on the Lost Update problem, but it's worth digging a little deeper. It's a sneaky issue, because it doesn't usually cause an error message. Instead, data just quietly disappears, leaving you scratching your head and wondering where it went. It's like a mischievous gremlin sneaking into your database and erasing bits of information when you least expect it.

Imagine an online multiplayer game where two players simultaneously try to pick up the same power-up. Both players' clients send a request to the server to grab the item. Without proper concurrency control, the server might process both requests, with each one checking that the power-up is still available before the other has marked it as taken, and grant it to both players even though only one should get it. This leads to an unfair advantage and a less-than-ideal gaming experience.

The root cause of Lost Updates often lies in the read-modify-write cycle. A process reads the current value, modifies it, and then writes the updated value back to the database. If two processes perform this cycle concurrently without synchronization, the updates can collide and one of them will be lost. It's like two trains heading towards each other on the same track — disaster is bound to strike.

There are several ways to prevent Lost Updates. One common approach is pessimistic locking: a process acquires a lock on the data before reading it, and no other process can modify that data until the lock is released. Think of the lock as a temporary barrier around your data that keeps conflicting changes out. Another technique is optimistic locking, which takes no lock up front but checks, at write time, whether the data has been modified since it was last read (typically by comparing a version number or timestamp). If it has, the update is rejected and the process re-reads the data and retries, so no update is silently overwritten.
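Both techniques can be sketched in a few lines of Python. This is an in-memory illustration only: the class and method names are hypothetical, and a `threading.Lock` plays the role a database lock would. In many SQL databases the same two ideas usually show up as `SELECT ... FOR UPDATE` (pessimistic) and as an `UPDATE ... WHERE version = ?` guard whose affected-row count is checked (optimistic).

```python
import threading


class LockedInventory:
    """Pessimistic: hold one lock across the whole read-modify-write cycle."""

    def __init__(self, quantity: int):
        self.quantity = quantity
        self._lock = threading.Lock()

    def sell(self, n: int) -> None:
        with self._lock:                  # other sellers wait here
            current = self.quantity       # read
            self.quantity = current - n   # modify + write, still under the lock


class VersionedInventory:
    """Optimistic: no lock while working; a write succeeds only if the version is unchanged."""

    def __init__(self, quantity: int):
        self.quantity = quantity
        self.version = 0
        self._guard = threading.Lock()    # keeps each read and each check-and-write atomic

    def read(self) -> tuple[int, int]:
        with self._guard:
            return self.quantity, self.version

    def try_write(self, new_quantity: int, expected_version: int) -> bool:
        with self._guard:
            if self.version != expected_version:
                return False              # data changed since it was read: reject
            self.quantity = new_quantity
            self.version += 1
            return True

    def sell(self, n: int) -> None:
        while True:                       # on rejection, re-read and retry
            quantity, version = self.read()
            if self.try_write(quantity - n, version):
                return


# Pessimistic: two concurrent sales always leave 10 - 3 - 2 = 5.
locked = LockedInventory(10)
workers = [threading.Thread(target=locked.sell, args=(n,)) for n in (3, 2)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(locked.quantity)                            # 5

# Optimistic: replay the Alice/Bob interleaving by hand.
inv = VersionedInventory(10)
alice_qty, alice_ver = inv.read()                 # Alice reads (10, 0)
bob_qty, bob_ver = inv.read()                     # Bob reads (10, 0)
print(inv.try_write(bob_qty - 2, bob_ver))        # True: quantity 8, version 1
print(inv.try_write(alice_qty - 3, alice_ver))    # False: Alice's stale write is rejected
inv.sell(3)                                       # Alice retries from a fresh read
print(inv.quantity)                               # 5
```

The trade-off in this sketch mirrors the real one: the pessimistic version makes the second seller wait, while the optimistic version lets both proceed and pays with a retry only when they actually collide.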

Dirty Reads

4. The Perils of Reading Uncommitted Data

Dirty Reads are like reading a draft of a document that's still being edited — you might get some information, but it could be completely wrong by the time the final version is published. In the world of databases, a Dirty Read occurs when one transaction reads data that has been modified by another transaction, but that second transaction hasn't committed its changes yet. This means the data could still be rolled back, leaving the first transaction with incorrect information.

Imagine an e-commerce website where a customer places an order. The system updates the inventory count to reflect the purchased items, but the order hasn't been confirmed yet (perhaps the customer's payment is still being processed and will eventually fail). Another customer comes along, reads the uncommitted inventory count, and concludes that the items are no longer available. They might decide to buy something else, even though the original order is about to be cancelled and the items will become available again. This could lead to lost sales and frustrated customers.

The problem with Dirty Reads is that they can lead to cascading errors. If a transaction relies on incorrect data from a Dirty Read, it might make incorrect decisions and propagate those errors throughout the system. It's like starting a rumor based on false information — it can quickly spread and cause a lot of confusion.

To prevent Dirty Reads, databases typically use isolation levels, which define the degree to which concurrent transactions are isolated from each other. Higher levels rule out more kinds of anomalies, at some cost in concurrency. Read committed is the lowest of the standard SQL levels that prevents Dirty Reads: a transaction only sees data that has been committed to the database. It's like having a filter that only shows you the finalized version of a document, so you can't be misled by the drafts.
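You can watch this protection in action with Python's built-in `sqlite3` module. SQLite does not let one connection see another connection's uncommitted changes (outside its optional shared-cache read-uncommitted mode), so it serves as a convenient stand-in here; the table, the addresses, and the `shop.db` file name are made up for illustration. Two separate connections play Transactions A and B from the address example.

```python
import os
import sqlite3
import tempfile


def uncommitted_write_is_invisible() -> tuple[str, str]:
    """Return what a second connection sees during and after an uncommitted update."""
    query = "SELECT address FROM customer WHERE id = 1"
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "shop.db")  # hypothetical database file
        # isolation_level=None puts the Python driver in autocommit mode,
        # so BEGIN and ROLLBACK below are issued explicitly.
        writer = sqlite3.connect(path, isolation_level=None)
        reader = sqlite3.connect(path, isolation_level=None)
        try:
            writer.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, address TEXT)")
            writer.execute("INSERT INTO customer VALUES (1, '1 Old Street')")

            writer.execute("BEGIN")          # Transaction A starts
            writer.execute("UPDATE customer SET address = '9 Typo Lane' WHERE id = 1")

            # Transaction B (a different connection) reads while A is still open.
            during = reader.execute(query).fetchall()[0][0]

            writer.execute("ROLLBACK")       # A abandons its change
            after = reader.execute(query).fetchall()[0][0]
            return during, after
        finally:
            reader.close()
            writer.close()


print(uncommitted_write_is_invisible())  # ('1 Old Street', '1 Old Street')
```

Transaction B never sees `'9 Typo Lane'`, so the rollback cannot leave it holding dirty data.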

Inconsistent Retrievals

5. When Your Data Refuses to Hold Still

Inconsistent Retrievals happen when a transaction reads a set of related values, or the same value more than once, while another transaction is modifying them, so the values it gets don't match any single consistent state of the database. (When the same item returns different values on two reads, the anomaly is usually called a non-repeatable read.) It's like trying to take a picture of a moving object: the image keeps changing, and you never get a clear, consistent shot.

Consider a financial reporting system that needs to calculate the total assets of a company. It reads the balances from various accounts and sums them up. However, while the system is reading these balances, another transaction is transferring money between accounts. As a result, the system might read some balances before the transfer and some after, leading to an inaccurate total assets figure. This could result in incorrect financial reports and misleading business decisions.
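Here is a step-by-step replay of that report, as a minimal sketch with made-up balances (600 in checking, 400 in savings) and a dict standing in for the accounts table. Because the transfer commits between the report's two reads, the report counts the transferred 100 twice.

```python
def replay_inconsistent_total() -> int:
    """Sum two accounts while a transfer between them commits mid-report."""
    accounts = {"checking": 600, "savings": 400}  # true total is always 1000

    total = accounts["checking"]                  # report reads checking: 600

    # A transfer of 100 from checking to savings runs and commits here.
    accounts["checking"] -= 100
    accounts["savings"] += 100

    total += accounts["savings"]                  # report reads savings: 500
    return total


print(replay_inconsistent_total())  # 1100, although no money was created
```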

The danger with Inconsistent Retrievals is that they can lead to incorrect calculations and analyses. If a transaction relies on consistent data to make decisions, getting different values each time can lead to flawed conclusions. It's like trying to solve a puzzle with pieces that keep changing shape — you'll never be able to complete it.

To address Inconsistent Retrievals, databases offer stronger isolation levels, such as repeatable read, snapshot isolation, or serializable. These give a transaction a stable view of the data it reads, regardless of concurrent modifications. Databases typically achieve this either with locking (holding read locks until the transaction ends) or with multi-version concurrency control (MVCC), which keeps older versions of each row so that every transaction can read from its own consistent snapshot. It's like having a time machine that freezes the data at a specific point in time, ensuring a consistent view.
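To show how MVCC gives a report a stable view, here is a toy single-threaded version store. It is a sketch only: the `MVCCStore` class and its integer-counter clock are assumptions for illustration. Each commit appends new versions tagged with a timestamp, and a reader only sees versions committed at or before the timestamp it took when it started. Real engines also need rules for in-progress transactions, cleanup of old versions, and write-conflict detection, all omitted here.

```python
import itertools


class MVCCStore:
    """Toy multi-version store: writers append versions, readers use a snapshot."""

    def __init__(self, initial: dict):
        self._clock = itertools.count(1)
        ts = next(self._clock)
        # key -> list of (commit_timestamp, value), oldest first
        self._versions = {k: [(ts, v)] for k, v in initial.items()}

    def snapshot(self) -> int:
        """Start a reader: it may see everything committed before this timestamp."""
        return next(self._clock)

    def commit(self, updates: dict) -> None:
        """Commit several writes together under one new timestamp."""
        ts = next(self._clock)
        for key, value in updates.items():
            self._versions[key].append((ts, value))

    def read(self, key: str, snapshot_ts: int):
        """Return the newest version committed at or before the snapshot."""
        for ts, value in reversed(self._versions[key]):
            if ts <= snapshot_ts:
                return value
        raise KeyError(key)


store = MVCCStore({"checking": 600, "savings": 400})
snap = store.snapshot()                           # the report starts
total = store.read("checking", snap)              # 600
store.commit({"checking": 500, "savings": 500})   # transfer commits mid-report
total += store.read("savings", snap)              # still 400 in this snapshot
print(total)                                      # 1000
print(store.read("savings", store.snapshot()))    # 500 for a reader that starts later
```

Replaying the transfer from the financial report example, the report keeps seeing the pre-transfer balances and its total stays at 1000, while a reader that starts after the commit sees the new values. Neither reader blocks the writer, which is the main attraction of MVCC over read locks.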


FAQ

6. Your Burning Questions Answered

Concurrency control can feel a bit like navigating a maze. So, let's tackle some frequently asked questions to help you find your way.

Q: What's the best way to handle concurrency control?
A: There's no single "best" way; it depends on your specific needs and the characteristics of your application. Factors to consider include the level of concurrency, the sensitivity of the data, and the performance requirements. Common techniques include pessimistic locking, optimistic locking, and multi-version concurrency control (MVCC). As a rule of thumb, optimistic locking suits workloads where conflicts are rare, while pessimistic locking avoids repeated retries when many transactions compete for the same data.

Q: Are isolation levels always the answer?
A: Isolation levels are powerful tools for managing concurrency, but they can also impact performance. Higher isolation levels provide more protection against concurrency problems, but they can also reduce the level of concurrency and increase the overhead of transactions. It's a trade-off: you need to find the right balance between data integrity and performance.

Q: How do I know if I have concurrency control problems in my application?
A: Look for symptoms like data inconsistencies, unexpected errors (such as deadlock or lock-timeout errors), and performance bottlenecks. Carefully examine your application's logs and monitor database activity to identify potential concurrency issues. If you suspect a problem, try to reproduce it in a controlled environment and analyze the sequence of events that leads to the error. Proper testing is key!
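One way to make a suspected race reproducible in a test, sketched in Python: a `threading.Barrier` holds both workers between their read and their write, so the lost update happens on every run instead of occasionally. The `stock` dict and the `reproduce_lost_update` function are hypothetical stand-ins for your shared row and the code under test.

```python
import threading


def reproduce_lost_update() -> int:
    """Force two unsynchronized read-modify-write cycles to interleave badly."""
    stock = {"widgets": 10}
    both_have_read = threading.Barrier(2)  # releases only once both threads have read

    def sell(n: int) -> None:
        current = stock["widgets"]         # read
        both_have_read.wait()              # wait until the other thread has read too
        stock["widgets"] = current - n     # modify + write using a now-stale value

    threads = [threading.Thread(target=sell, args=(n,)) for n in (3, 2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stock["widgets"]


result = reproduce_lost_update()
print(result)  # 7 or 8 depending on which write lands last; never the correct 5
```

Once the failure is reliable, the same harness becomes a regression test: swap in the locked or versioned update and assert that the result is 5.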